Last month, I discussed using Docker containers because they increase confidence in our deployed infrastructure. Now that we’ve decided to use this new tool, we need to learn how to use it well. After spending more time with containers, I’d like to dive deeper into their benefits and into the process of building (and rebuilding) them.

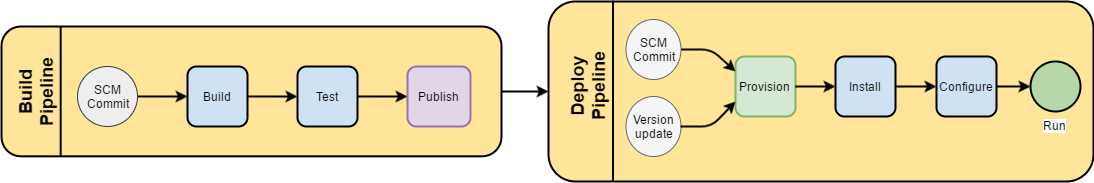

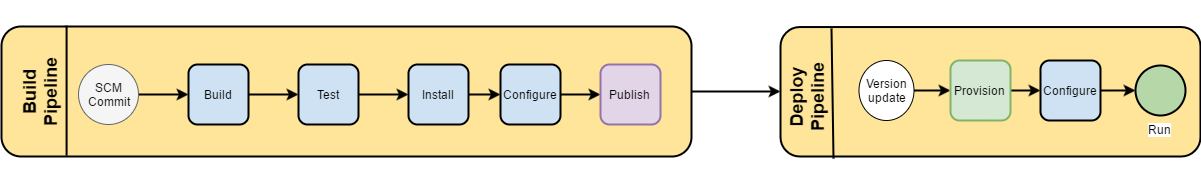

In our standard workflow, we have a repository of source code, a command to build it, and a command to publish the resulting artifact. In a typical Java project, that might mean producing a .war file for a web service. For a standalone application, we might produce a binary or even an installable package (like an .rpm). With Docker, we need to go a couple of steps further: we produce a launchable container with that asset installed, and then integrate it with the other elements of the infrastructure.

Many of us already have these steps in some form, whether automated or not. With Docker builds, we are just moving them earlier in the process. Instead of a .war file, we produce a Docker container with Java and Tomcat installed and the application’s .war configured in it. If we have infrastructure code that installs a messaging service (like Kafka), we run that code inside a Docker container in our build pipeline instead of running it directly in the target environment.
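As a rough sketch of what "a Docker container with Java and Tomcat installed and the application’s .war configured in it" can look like, the Dockerfile below starts from the official `tomcat` image on Docker Hub (which already bundles Java) and drops a WAR into Tomcat’s auto-deploy directory. The `tomcat:9` tag and the `target/myapp.war` path are assumptions for illustration, not part of the original setup.

```dockerfile
# Sketch only: assumes the official "tomcat" image from Docker Hub
# (Java is already included) and a hypothetical WAR at target/myapp.war.
FROM tomcat:9

# Tomcat auto-deploys WARs placed in webapps/; naming the file ROOT.war
# serves the application at the context root "/".
COPY target/myapp.war /usr/local/tomcat/webapps/ROOT.war

# Tomcat's default HTTP connector listens on port 8080.
EXPOSE 8080

# No CMD needed: the base image already starts Tomcat with "catalina.sh run".
```

In this arrangement, the image built from this file, rather than the bare .war, is the artifact the pipeline publishes.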

Why do we even want a Docker container workflow?

As always in software engineering, we need to be able to change this build process, review the change, and test it, so the process needs to be easy and automated. There are clear benefits to a Docker-based workflow for most of our production application stacks, including the following:

We can produce a build artifact containing more of our infrastructure

When we run an application, we are rarely running just the application. Even locally, the program depends on other binaries and configuration. We gain confidence by testing what we put in production, and the more closely the tested system matches production, the more confident we can be. Instead of deploying a traditional build artifact (e.g. a .war), we can promote the container itself.


Less vendor lock-in

For years, people have seen the benefit of building machine images, but those images deliver whole operating systems, usually in a vendor-specific format like AWS’s AMIs or VMware’s VMDKs. Docker artifacts are more transportable, both in size and in where they can run. Thinking this through, we can now swap out the underlying host operating system more easily. If you host web services for different customers, this can be a huge benefit when one customer is in AWS (EC2), another is in Microsoft’s Azure, and another is in a private data center.

Separating out OS security upgrades

We can separate our infrastructure upgrades (which now live in Docker containers) from our OS security upgrades. Distributions like RHEL are nice because they do a lot of work for us, but sometimes an OS upgrade forces us to upgrade one of our core packages as well. With containers, we are no longer forced to upgrade Apache just because we upgraded Ubuntu.

Bringing the build team closer to developers

So far we’ve mostly talked about the production side of things, but production is not the only place where replicating environments is hard. If we build inside Docker containers, we can share our build environments, so developers and the build team build on the same configuration.

“Works on my machine” – Every Developer (1963-present)
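One way to share a build environment, sketched under a few assumptions: the project is a Maven-based Java web application, and the team standardizes on the official `maven` image from Docker Hub. The tag below is only an example to replace with whatever version your team pins, and this build image is hypothetical, not one from the original post.

```dockerfile
# Hypothetical shared build environment for a Maven-based Java project.
# Assumes the official "maven" image; pin an exact tag your team agrees on.
FROM maven:3.9-eclipse-temurin-17
WORKDIR /src

# Copy only the POM first, so dependency downloads get their own layer
# and are reused from the build cache until pom.xml changes.
COPY pom.xml .
RUN mvn -B dependency:go-offline

# Then copy the sources and build the .war (output lands in /src/target).
COPY src ./src
RUN mvn -B package
```

Because developers and the CI server both run `docker build` against the same file, they compile with the same JDK and Maven versions.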

Dockerfile Best Practices

The first thing to understand is that Docker stores its images as a series of layers, and each RUN, COPY, or ADD instruction in a Dockerfile adds another filesystem layer. If we translate our build steps directly into a list of Dockerfile commands, we might end up with this:

```dockerfile
FROM centos
MAINTAINER Jon Malachowski "[email protected]"
RUN yum install epel-release -y
RUN yum install java kafka daemonize -y
COPY docker-cmd /
COPY kafka_config_service.properties $kafka_basepath/config/server.properties
EXPOSE 2181 9092
CMD /docker-cmd
```

In this Dockerfile, we have a few problems:

- Multiple RUN lines

Each RUN line creates its own layer, and we don’t need that many. Extra layers can bloat the image and add unnecessary complexity. So the first recommendation from Docker’s best practices is to chain the commands together with the shell’s && operator in a single RUN instruction. Our other option is to use a tool that squashes the layers together afterwards.

- Cruft

Installing packages with yum/apt-get works, but the package manager leaves behind a cache that we want to keep out of Docker images. Most package managers have a command to clean this up. It has to run in the same RUN instruction as the install, because a cache deleted in a later layer still takes up space in the earlier one:

```dockerfile
RUN yum install epel-release -y && \
    yum install java kafka daemonize -y && \
    yum clean all
```

- Too Much Bash

As our container setups get more complicated, we must realize that bash is a mediocre language for organizing infrastructure. There is a set of languages designed specifically for installing and configuring infrastructure. So, can we use Chef/Ansible to build Docker containers well? (In case it wasn’t obvious, YES!)
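Applying the first two fixes to the original Dockerfile gives something like the sketch below. It keeps the author’s package names as written. `$kafka_basepath` is likewise kept, although the post never defines it. The deprecated MAINTAINER instruction is swapped for a `maintainer` LABEL; that swap is a style assumption, not part of the original.

```dockerfile
# Sketch of the original Dockerfile with the layer and cache fixes applied.
FROM centos
LABEL maintainer="Jon Malachowski"

# One RUN instruction means one layer; cleaning the yum cache in the same
# instruction keeps the cache out of the image entirely.
RUN yum install epel-release -y && \
    yum install java kafka daemonize -y && \
    yum clean all

COPY docker-cmd /
# Note: $kafka_basepath is not defined in the post; it would need an ENV
# instruction pointing at the Kafka install directory.
COPY kafka_config_service.properties $kafka_basepath/config/server.properties

EXPOSE 2181 9092
CMD /docker-cmd
```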

User Tip: As a digital neat-freak, I don’t like dozens of old Docker containers and images hanging around. It won’t be long before you, too, want a quick way to clean up. Try the following:

- To clean out old (exited) containers:

```shell
docker rm $(docker ps -qa --no-trunc --filter "status=exited")
```

- To clean out old (dangling) images:

```shell
docker rmi $(docker images --filter "dangling=true" -q --no-trunc)
```

Using Ansible to Build Docker Containers

To use Ansible/Chef to build an image, we would generally either need sshd running in the container (which is not the Docker way™) or need to run the tool from inside the container. To run it from inside, we would pre-install our tooling on a base image that our Dockerfile inherits from. This simplifies our Dockerfile and moves the meat of the container construction into your configuration management language of choice.

```dockerfile
FROM jmalacho/base
MAINTAINER Jon Malachowski "[email protected]"
RUN git clone --depth 1 https://github.com/jmalacho/kafka.git /ansible && \
    ansible-playbook -v /ansible/install.yml && \
    rm -rf /ansible
EXPOSE 2181 9092
CMD /docker-cmd
```
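The post doesn’t show what `jmalacho/base` contains. A minimal guess, assuming a CentOS base with Git and Ansible installed from EPEL (the two tools the Dockerfile above calls), might look like this; treat it as a hypothetical illustration, not the author’s actual base image.

```dockerfile
# Hypothetical sketch of a base image like jmalacho/base; its real
# contents are not shown in the post. Assumes CentOS + EPEL.
FROM centos

# git fetches the Ansible role; ansible-playbook applies it at build time.
RUN yum install epel-release -y && \
    yum install git ansible -y && \
    yum clean all
```

Both tools stay in every image built on top of this base, which is the bloat problem described next.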

This is better software organization because it moves all our changes into an Ansible role, but it is a step backwards in terms of image bloat. We’ve had to pre-install build tools in our container that it doesn’t need at runtime (right after I talked about making containers smaller). We need a way for Ansible to reach into the container and install software without the bloat of either sshd or Ansible inside it. At the end of the day, we need a Docker-aware tool if we want to be smart about this. Chef¹ and Ansible both have one for Docker. I’ll be talking about the Ansible one next month.

XOXO,

Jonathan Malachowski

One thought to “Docker Containers as Continuous Integration Artifacts”

OT –

When it comes to layering – I *do* want that for prototyping since it speeds things up.

For the published container obviously I want those squashed, but this doesn’t seem like something Docker makes super easy?